The Journey Robot

David P. Anderson and

Mike Hamilton

An Autonomous Six Wheel Off-Road Robot with Differential

All-Wheel Drive, All-Wheel Independent Suspension and Static/Dynamic Stability.

25 September 2014:

jBot Seeking Orange Traffic Cones

31 March 2008:

DPRG Outdoor Robot Challenge

26 March 2007:

jBot's Programming

27 July 2006:

jBot's IMU Odometry

08 January 2006:

"A Geocaching Robot"

12 August 2005:

jBot's Build Log

This website was

"slashdotted" on

01 August 2005

Journey Robot is featured in the O'Reilly book

The Makers.

The

Journey Robot is an outdoor robot designed to run offroad in unstructured environments. It was inspired by

the

DARPA Grand Challenge and built as a prototype vehicle for that competition, in collaboration with Ron Grant

and Steve Rainwater of the

Dallas Personal Robotics Group, Mike Hamilton,

Mark Sims and myself.

jBot is a fully autonomous, self-guided, mobile robot, capable of navigating on its own

to an arbitrary set of waypoints while avoiding obstacles along the way.

The concept for the robot was hashed out over the summer of 2004 in a series of RBNO meetings at the warehouse

of the DPRG.

The platform was designed by Mike

during the fall and winter of 2004 and I built the vehicle

in my

home machine shop in the spring of 2005. jBot moved under its own power for the first time on

April 9, 2005 (3.5M mp4).

The robot was designed to meet the twin requirements of off-road stability and zero turning radius. The

present platform is a

differentially steered 6-wheel vehicle, with all-wheel drive and all-wheel independent suspension.

Power is provided by two

Pittman 24 Volt DC gearhead motors

and a 24 volt, 4 amp-hour NiMH battery

pack. Total robot weight is about 20 lbs.

The robot's tires, wheels, and suspension were scavenged from a

Traxxas E-MAXX 4-wheel-drive 1/10 scale R/C

monster truck.

These are very

nicely engineered suspension and drive parts, with a

full set of factory parts available, as well as lots of after-market components from various

manufacturers. Mike designed a custom drive train to interface with the suspension parts, giving us an all-wheel-drive

vehicle with zero turn radius and full independent suspension. The only aftermarket parts used were

MIP CVD steel constant velocity joint drive shafts, which replace the

factory plastic parts that were

not able to handle the torque, and a set of aftermarket

Road Rage tires.

The robot's "brain" is a Motorola 68332 micro-controller running in one of Mark Castelluccio's

Mini Robomind controller boards. The current suite of

sensors includes quadrature shaft encoders on the motors, an array of four Polaroid

(Senscomp)

sonar transducers, a

Microstrain 3-axis inertial measurement unit, and a

Garmin Etrex GPS receiver. A pair of

Devantech H-Bridge intelligent electronic speed controllers are used to drive the DC gearhead motors.

The robot's operating system

and control and navigation software were developed and written by the author based on algorithms originally developed for

the

SR04 and the

nBot robots.

The robot uses a small cooperative multi-tasking executive to run a set of behaviors. The

behaviors are prioritized in a behavior-based or "subsumption" architecture.
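As a rough illustration of fixed-priority behavior arbitration, here is a minimal Python sketch. It is not jBot's code, which runs on the 68332 and is not reproduced on this page. The behavior names, the `Command` type, the sensor dictionary keys, and the 3-foot sonar threshold are all hypothetical and chosen only for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Command:
    """A motor request from one behavior (hypothetical units)."""
    speed: float   # forward speed request
    turn: float    # turn request, positive = left (assumed convention)
    source: str    # name of the behavior that produced the request


# A behavior reads the sensors and returns a Command when it wants
# control, or None when it has nothing to say this cycle.
Behavior = Callable[[dict], Optional[Command]]


def escape(sensors: dict) -> Optional[Command]:
    if sensors.get("stuck"):
        return Command(speed=-0.5, turn=1.0, source="escape")
    return None


def avoid(sensors: dict) -> Optional[Command]:
    # Assumed threshold: react to anything closer than 3 feet.
    if sensors.get("sonar_ft", 99.0) < 3.0:
        return Command(speed=0.2, turn=1.0, source="avoid")
    return None


def navigate(sensors: dict) -> Optional[Command]:
    # Lowest priority: always steers toward the waypoint.
    return Command(speed=1.0, turn=sensors.get("bearing_error", 0.0),
                   source="navigate")


def arbitrate(behaviors: List[Behavior], sensors: dict) -> Command:
    """Run every behavior once, highest priority first; the first
    behavior that asks for control subsumes all the ones below it."""
    winner = None
    for behavior in behaviors:
        cmd = behavior(sensors)  # each behavior runs to completion, then yields
        if cmd is not None and winner is None:
            winner = cmd
    if winner is None:
        return Command(0.0, 0.0, "idle")
    return winner


PRIORITY = [escape, avoid, navigate]
print(arbitrate(PRIORITY, {"sonar_ft": 2.0}).source)
```

Every behavior still runs on every pass, so lower-priority behaviors keep their internal state current even while a higher-priority one holds the motors.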

The powerful TPU subsystem of the Motorola 68332 provides interrupt-driven hardware interfaces for

user I/O, motor drivers,

quad encoders, PID speed controller, sonar array, and radio PWM decode for a remote emergency override.

It also supplies four RS-232 communication channels for the control terminal,

IMU, GPS, and an optional serial video camera.

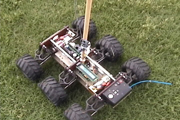

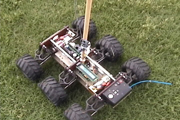

Here's a nice portrait of the assembled jBot chassis. It was taken by Dave Ellis on stage at the

Dallas Science Place April 2005

DPRG monthly meeting. This was before the electronics and sensors were installed on the platform.

Click on the image for a high-resolution version.

MPEG Videos of jBot in Action

The following videos feature the robot navigating to a set of waypoints

using inertial navigation rather than GPS. They are in chronological order, oldest first, and, as sensors and capabilities

were added

to the basic platform, the robot gets "smarter" in the later, more recent videos.

Inertial Navigation

The wooden mast in the pictures below is a temporary addition while I test the

inertial measurement unit. The IMU is the black box mounted on top of the mast. These videos test the

inertial guidance algorithms in the absence of any other obstacle detection and avoidance sensors.

Autonomous Pied Piper (9M mp4). I took the robot to a local park to test the new position/location software by

having the robot autonomously drive around a 100 foot square, clockwise and counter clockwise, like the Borenstein UMBMark.

IMU Odometry (15M mp4). 100 feet out and back. Note the skid marks in the dirt where the robot turns around. It had already returned to

the same spot three consecutive times before I thought to turn on the video

camera.

Odometry over curbs (15M mp4). Using the white and red stripes painted on a parking lot as giant graph paper for testing the robot position error after climbing over a curb, and also after

climbing over 2 curbs (6M mp4).

Navigation over Uneven Terrain

Odometry over rough terrain (5M mp4). Short back and forth over broken ground, 20 feet.

Odometry over more rough terrain (6M mp4). 100 feet out and back over broken ground.

Obstacle Detection and Path Planning

Sonar array

An array of 4 SensComp sonar detectors gives the robot total frontal coverage of about 60 degrees out to about

30 feet. These

sensors drive multiple behaviors including obstacle avoidance, path planning, and perimeter following. In the

following videos, the robot is navigating to a single waypoint and back, as before, with the addition of these behaviors.

Navigating with Sonar and Odometry (12 Meg). Attempting to drive in a straight line to a point 100 feet away and return, repeatedly, as in the previous videos, but now through a garden on the campus at SMU, using the sonar array to avoid obstacles along the way.

Here is a bigger version (24M mpeg)

of the complete run. Note that on the last leg one of the sensors malfunctions (bad solder connection) and

the robot appears to carefully home in on (and climb) a tree and two light poles. In these cases the "escape"

behavior is triggered when the robot senses an approaching unstable angle of the platform base.

Following a perimeter (6.2M). The robot uses its sonar array to follow

the perimeter of part of the Law School complex at SMU.

Navigating Through the Woods

Navigating through the woods (10 Meg). First of four legs of navigation through

the woods at a tent-campsite on Garza-Little Elm Reservoir. The robot is seeking to

a point 200 feet away through the woods, and then back to the starting place, which it

repeats 4 times for a total exceeding 1600 feet, about 1/3 mile. Total accumulated

error for the entire test was about 20 feet, roughly 1.25% position error. For those with

the bandwidth and patience,

here (50 Meg)

are all four legs of the complete test.

Here are two "hat trick" videos. In each, the robot is set to seek through the woods to a point 500 feet

away and return to the starting point, which is marked with a straw hat. In the

first hat trick video (19M mp4) the robot returns within about 5 feet of the hat.

For the

second hat trick video (21M mp4) the robot returns directly to, and runs over,

the hat.

SteadyCam Videos

I built one of

these steadycam supports for my video camera and it works remarkably well for

chasing jBot through the woods. Here are my first attempts at using it.

steadycam 1 (20M mp4) is a trip through the woods to

a point 500 feet away and back, using the steadycam to follow the robot.

steadycam 2 (20M mp4) is a similar trip through the

woods, but on this one I remembered to "drop the hat" so as to have a reference mark

for the origin. Both trips are over 1000 feet with a total accumulated position

error of about 5 feet (I think).

New Shoes: jBot has a new set of 7.5 inch

IMEX "Rock Crawler" tires which give it a little

more ground clearance and top speed. These tires don't do as well in deep grass as the others.

But on concrete and gravel and curbs they are a nice improvement.

Here is a

video of jBot climbing the stairs (20M mp4) of Dallas Hall on the campus at SMU. Here is a

low resolution stair-climbing video (5M mp4).

The video is a composite of two runs, one up the stairs and one down. There are a few frames missing where I kicked the camera near the top of the stairs (still getting used to the steadycam mount).

The original frames are here (23M mp4) but they include a very

annoying noise burst on the audio track, the reason for editing them out. I include them here

at the suggestion of our friends on slashdot,

who had some doubts. It also has a funnier ending.

jBot in the Colorado Rockies

jBot spent part of the 2005 summer in the Colorado Rocky Mountains, clearly the most challenging outdoor terrain that

the robot has encountered, and "Papa" had to bail it out of trouble more than a few times. Here are a couple of videos

of the robot navigating through the forest, and along a forest service road.

jBot in the high country (9.3M mp4). The robot navigates

over and around obstacles in the Colorado Rockies at about 11,000 feet elevation, to a waypoint 500 feet away,

and back to the starting point (minus about 8 feet).

jBot climbs a hill (11M mp4). The robot navigates

up a steep hill over rocky terrain, and back down again.

jBot in the high country (16M mp4). The robot navigates

over and around obstacles in the Colorado Rockies to a waypoint 500 feet away,

and back to the starting point (minus about 12 feet). Deus ex machina comes to the rescue when the robot flips over

on its back.

jBot on the road (10M mp4). The robot navigates to the top

of a steep forest service road and back down. The curious circling at the top of the road is a result, I think, of

the camera man (cough cough) getting in the robot's way as it attempts to reach its waypoint.

Getting Unstuck

Unlike my other robots, the Journey Robot has no

physical bumper that it can use for collision detection. A physical bumper is problematic given the design of the

6-wheel drive and independent suspension. Further, most objects that jBot collides with, it just climbs over. So the

definition of a collision becomes more complex in an offroad environment.

Other common methods for detecting a collision include monitoring the motor current for excesses, or using some sort

of accelerometer to deduce the platform's motion. Both of these approaches are difficult for an offroad vehicle

which is subject to shocks and sudden large torque requirements in the normal course of its operation.

For jBot, the basic question is not whether the platform has had a collision, but rather whether the robot is stuck. This could

happen, for example, if the robot is high-centered, has become entangled in brush or barbed wire, or has fallen into

a crevice. The robot has a suite of behaviors for escaping from entrapment, but the first step is to know that it

is trapped.

jBot uses three different methods to detect states in which it must deploy its escape strategies. The first just

monitors the wheels to be certain they are turning (at more-or-less the speed that is being requested). This is somewhat

like monitoring the motor current and detects when the wheels are jammed and unable to turn. The second method uses

the robot's angle sensors to know when the robot platform is approaching a dangerous angle, either too steep

or canted too far left or right. It then uses the orientation angle and sonar array to attempt to choose the correct

method of recovery. That method is illustrated in a couple of clips from the following video.
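The first two checks can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not jBot's code: the function names, the 25% speed fraction, the pitch and roll limits, and the sign convention for roll are all hypothetical values picked for the example.

```python
def wheels_stalled(requested_speed: float, measured_speed: float,
                   min_fraction: float = 0.25) -> bool:
    """True when the wheels are commanded to move but are turning at
    much less than the requested speed (assumed cutoff: 25%)."""
    if abs(requested_speed) < 1e-6:
        return False  # no motion requested, so the wheels are not jammed
    return abs(measured_speed) < min_fraction * abs(requested_speed)


def tilt_alarm(pitch_deg: float, roll_deg: float,
               max_pitch_deg: float = 35.0,
               max_roll_deg: float = 30.0) -> str:
    """Classify the platform attitude. The limits are assumed values;
    negative roll is taken to mean canted left (assumed convention)."""
    if abs(pitch_deg) > max_pitch_deg:
        return "too_steep"
    if abs(roll_deg) > max_roll_deg:
        return "canted_left" if roll_deg < 0 else "canted_right"
    return "ok"


print(wheels_stalled(requested_speed=2.0, measured_speed=0.1))
print(tilt_alarm(pitch_deg=10.0, roll_deg=-40.0))
```

The tilt result is a label rather than a boolean so that a recovery step could choose a different maneuver for each case, in the spirit of the text above.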

The third method seems to work very reliably in determining that the robot is trapped. It takes advantage of the fact

that jBot tracks its movements with an inertial orientation sensor and also with more traditional wheel odometry. The

robot compares the rate-of-change of the orientation angle calculated from the wheels to the rate-of-change of

the orientation angle measured by the IMU. If they are very different, and continue to be different for several

seconds, it signals that the robot is trapped.

This works because the robot, when trapped, is almost always

trying to turn in order to escape, and because of the light wheel loading, the wheels usually slip and are not jammed.

The amount of turn that the wheels

measure and that the IMU measures are

therefore very different, and the robot can know that it is stuck. Robots without a fancy IMU orientation

sensor might apply this same technique with the addition of a single vertical rate gyroscope.
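Here is a minimal Python sketch of that comparison, offered as an illustration rather than as jBot's implementation. The class name, the wheel base, the 20 degrees-per-second threshold, and the 3-second hold time are hypothetical. The wheel-derived turn rate uses the standard differential-drive formula, which is only an approximation for a six-wheel skid-steered platform.

```python
import math


class TrapDetector:
    """Flags 'trapped' when wheel-derived and gyro-derived yaw rates
    disagree for several seconds in a row. All defaults are assumed."""

    def __init__(self, wheel_base_ft: float = 1.5,
                 rate_threshold_dps: float = 20.0,
                 hold_seconds: float = 3.0) -> None:
        self.wheel_base_ft = wheel_base_ft
        self.rate_threshold_dps = rate_threshold_dps
        self.hold_seconds = hold_seconds
        self._mismatch_time = 0.0  # how long the two rates have disagreed

    def odometry_yaw_rate_dps(self, v_left: float, v_right: float) -> float:
        """Yaw rate in deg/s implied by left/right wheel speeds in ft/s."""
        return math.degrees((v_right - v_left) / self.wheel_base_ft)

    def update(self, v_left: float, v_right: float,
               imu_yaw_rate_dps: float, dt: float) -> bool:
        """Call once per control cycle; returns True when trapped."""
        wheel_rate = self.odometry_yaw_rate_dps(v_left, v_right)
        if abs(wheel_rate - imu_yaw_rate_dps) > self.rate_threshold_dps:
            self._mismatch_time += dt
        else:
            self._mismatch_time = 0.0  # rates agree again, start over
        return self._mismatch_time >= self.hold_seconds


# Wheels report a hard spin in place while the gyro reports no rotation.
detector = TrapDetector()
results = [detector.update(-1.0, 1.0, imu_yaw_rate_dps=0.0, dt=0.5)
           for _ in range(6)]
print(results)
```

Requiring the mismatch to persist keeps brief wheel slip, which is normal off road, from triggering an escape.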

jBot getting unstuck (48M mp4).

This video is

a collection of clips of the robot detecting that it is trapped, and performing escape maneuvers. In each case, the

robot detects that it is stuck using one or all of the three methods outlined above.

Here is a higher-res version of

jBot getting unstuck (202M mp4).

Here is a low-res mp4 (16M) and windows media (5.6M wmv) version of the same video,

and here is an even lower-res real media (4.8M rm) version.

Journey navigation and perimeter following.

I have been studying the problem of dealing with dead-end cul de sacs en route to a waypoint. In these cases, the

navigation technique of the robot simply steering the shortest path toward the destination results in the robot

being trapped in the cul de sac. The more generic difficulty is described in some robotics textbooks as the "concave

vs. convex" maneuvering problem, sometimes also referred to as a navigation "singularity."

jBot in its current enlightenment attempts to solve this problem by switching between waypoint navigation mode and

perimeter following mode. When it "determines" that it is in a cul de sac, it switches to a perimeter or wall-following

mode, and continues in that mode until it "determines" that its path to the next waypoint is no longer blocked, at

which time it returns to waypoint navigation mode.

As might be obvious, the big problem here is how the robot "determines" when to switch between the two modes. I am still

working on this, but the current method is quite simple. The robot switches on wall-following when/if it finds itself

heading directly away from the target waypoint. And it switches back to waypoint mode when/if it finds itself heading

directly toward the target waypoint.
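The switching rule can be sketched as a two-state machine in Python. This is a hedged illustration, not jBot's code: the function names, the coordinate convention (angles in degrees, counterclockwise from the +x axis), and the 15-degree tolerance that stands in for "directly" are all assumptions.

```python
import math

WAYPOINT = "waypoint"
WALL_FOLLOW = "wall_follow"


def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def bearing_to(x: float, y: float, tx: float, ty: float) -> float:
    """Bearing from (x, y) to the target (tx, ty), in degrees."""
    return math.degrees(math.atan2(ty - y, tx - x))


def next_mode(mode: str, heading_deg: float,
              x: float, y: float, tx: float, ty: float,
              tolerance_deg: float = 15.0) -> str:
    """Return the navigation mode for the next control cycle."""
    error = abs(wrap_deg(bearing_to(x, y, tx, ty) - heading_deg))
    if mode == WAYPOINT and error >= 180.0 - tolerance_deg:
        return WALL_FOLLOW   # heading away from the target: follow the wall
    if mode == WALL_FOLLOW and error <= tolerance_deg:
        return WAYPOINT      # heading toward the target: resume seeking
    return mode


# Robot at the origin, target 100 feet away along +x, robot facing away.
print(next_mode(WAYPOINT, heading_deg=180.0, x=0.0, y=0.0, tx=100.0, ty=0.0))
```

Because the two conditions sit at opposite ends of the heading range, the rule has built-in hysteresis: once in wall-following mode the robot stays there through every intermediate heading until it points back at the target.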

Circum-navigating the TI building (31M mp4). This image shows an aerial view of

the start and ending

waypoints for an attempted half mile trek across the campus of the Engineering School at the University of North Texas.

jBot is navigating to a single waypoint on the far side of the old TI building, and it starts the journey pointed

directly into a dead-end cul de sac. The video has labels where the robot switches between waypoint navigation and

wall-following modes.

Here's a

higher-resolution (85M mp4) and

here is a low-res windows media (16M wmv) version of the same video,

and here is an even lower-res real media (9M rm) version.

UPDATE: 18 June 2021:

jBot @ TI Higher-Res with new labels.

Circum-navigating Dallas Hall (9.4M mp4). This aerial image is of the starting and ending

waypoints for a journey of 2000 feet across the campus at SMU, including a detour around Dallas Hall. After initially

thrashing around in the ground cover behind the building, jBot switches to wall-following mode and follows the perimeter

around to the front, where it returns to waypoint navigation mode.

Here is a low-res windows media (4.7M wmv) version of the same video,

and here is an even lower-res real media (2.4M rm) version.

This site was

"slashdotted" on

01 August 2005 at

9:08pm EDT.

At the height of the excitement our little

two-headed web server

smoothly sustained

about

50,000 hits and 27 GB of data per hour.

Comments on slashdot suggest that many readers thought

jBot was an R/C car, controlled by a human, and were

correspondingly

unimpressed, although a

few

perceptive

individuals seemed to

appreciate the effort.

The overall event lasted more than 40 hours, generating nearly a million hits and moving about 335

Gigabytes of data. Amazing.

My other robots

last update: 18 June 2021 dpa

(C) 2004-2021 David P. Anderson
